Defined research approach with design team

Designed, built, and distributed a survey to learn more about our users

Designed multiple study plans, conducted internal interviews, built service maps, created a “happy path” service journey

Re-defined personas into more flexible “User Mindsets”

Designed and facilitated cross-functional workshops

Co-presented findings and approach to product leadership for buy-in

Designed, facilitated, analyzed, and reported findings for a usability evaluation

Built a database of opted-in participants so the team would have easier recruitment for future research needs

DURATION | ~6 months

IMPACT |

Improved Data Ingest workflow for AWS sources.

Set of design principles to guide future Data Ingest design work.

Participant database for Data Ingest team to tap into for future research.

Hackathon project won an award & inspired future Data Ingest / dashboard creation work.

Mindsets adopted by other product teams.

DIRECT STAKEHOLDERS | Design Manager, Principal Product Designer, Sr. Product Designer, Technical Product Manager

METHODOLOGIES | Survey, Internal Interviews, Service Mapping, Usability Evaluation, Video Prototype

Hackathon video I created as a result of this project (more details below).

background & problem space

To use a data analysis tool like Splunk, users first have to get data into the tool. At Splunk, we call this Getting Data In (GDI). Every Splunk product shares a complex and often daunting GDI journey. A team of designers and I were assembled to assess and rethink this complex journey in a way that could be used across each of our products: Unified GDI.

With 16+ PM stakeholders and hundreds of varying GDI journeys, we set out to simplify this experience for our users.

research questions

Evaluative (Phase 2):

Does our new GDI journey improve the user experience for this specific AWS data source?

What are tactical design principles that we should follow while ushering users along our new GDI journey?

What are the biggest usability issues we need to address?

Foundational (Phase 1) - before any designs were created:

Who are we building the GDI journey for? Which personas?

What services does Splunk offer for the GDI journey, and how do users interact with these services?

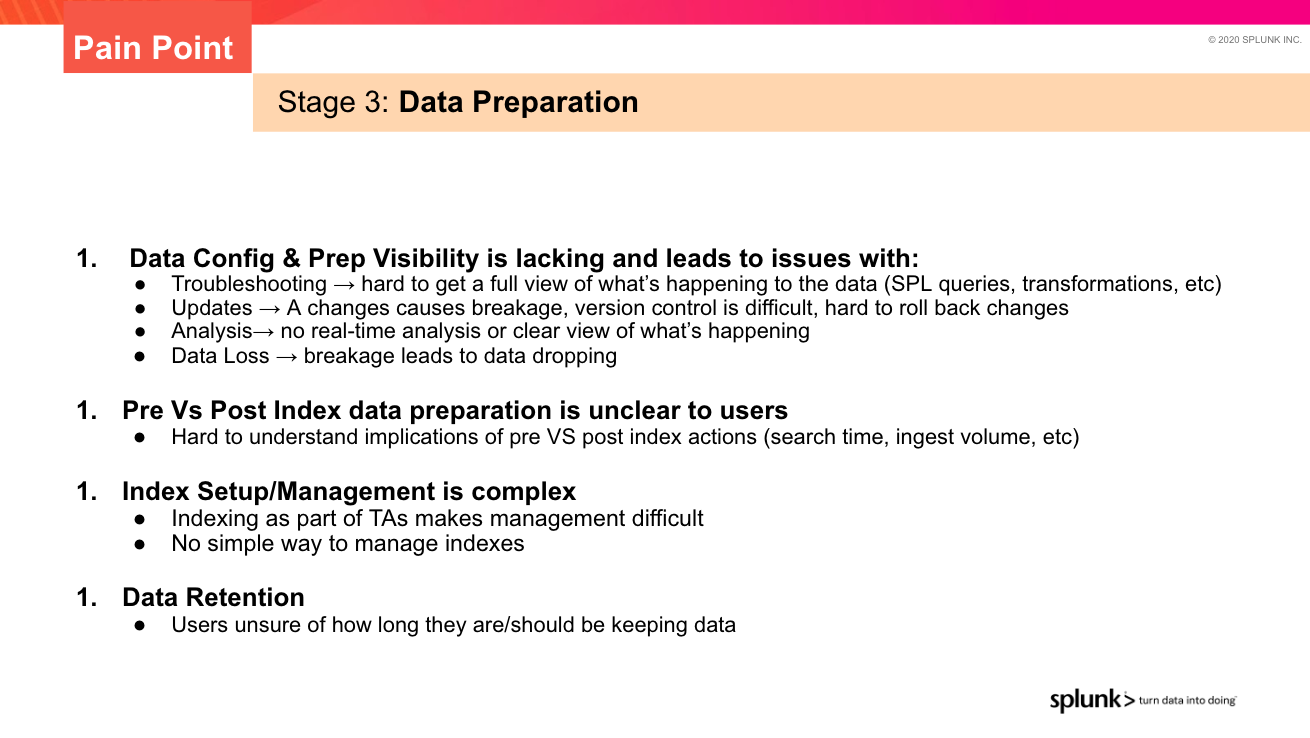

What are the biggest pain points in the current GDI journey, and how can we solve for them?

methods

1. survey | understand our users & build a database

From our database of ~8,000 Splunk users/research participants, we surveyed the ~350 users who matched our criteria.

Of those, 34 responded. While the sample was too small to be statistically significant, it gave us a pool of administrators to reach out to when it came time for evaluative research.

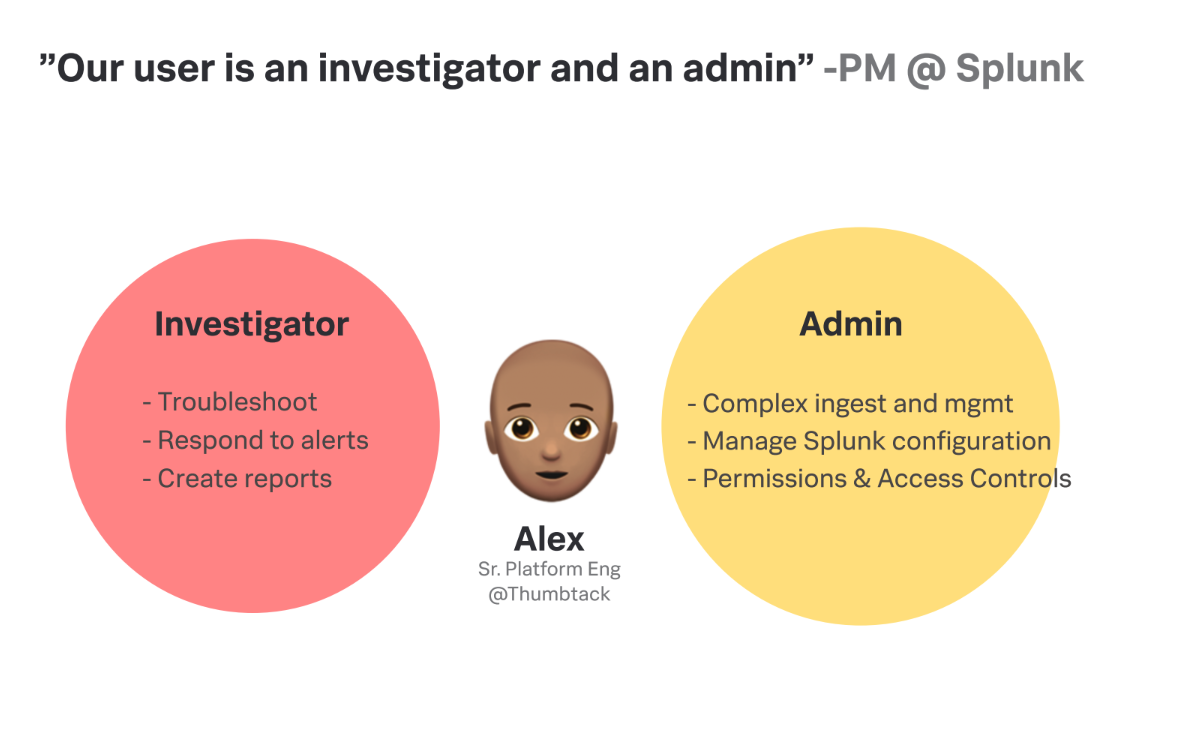

The data allowed us to better understand how personas translated into “mindsets.” For example, 96% of Data Admins also considered themselves System Admins, even though Data Admin and System Admin were treated as separate personas at the time.

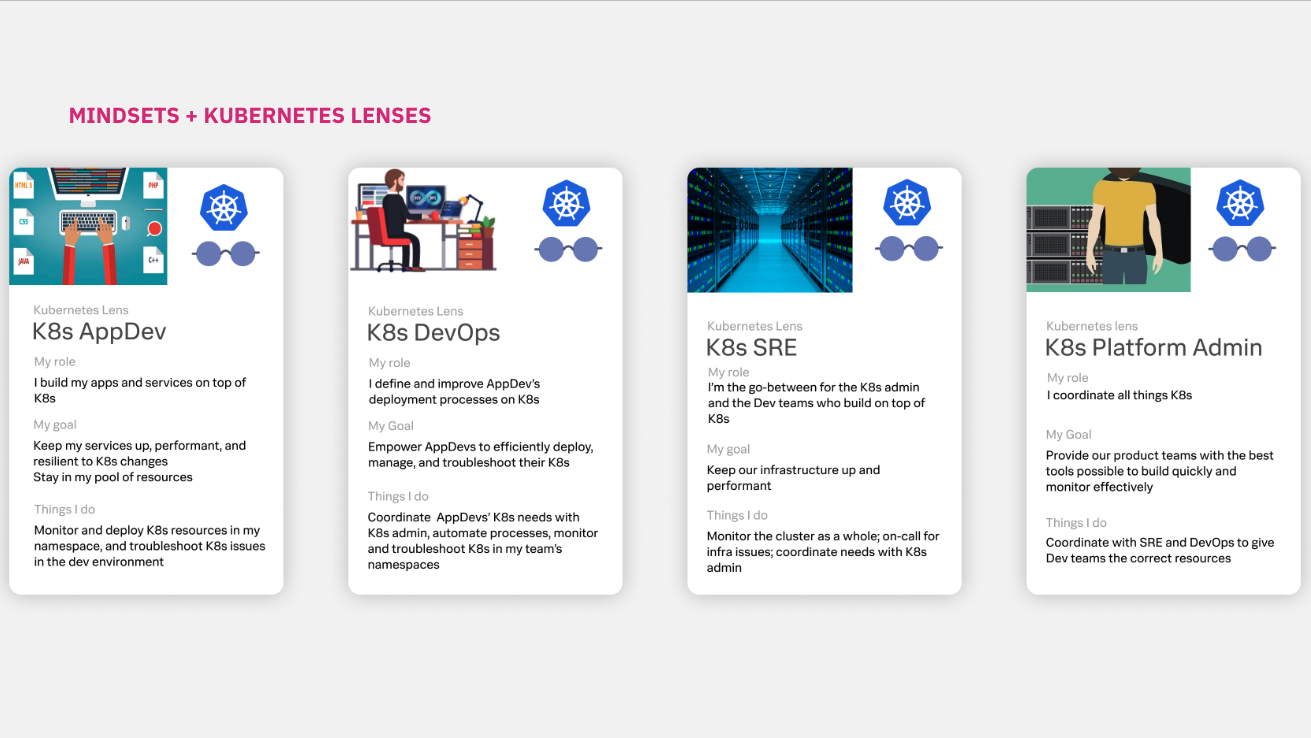

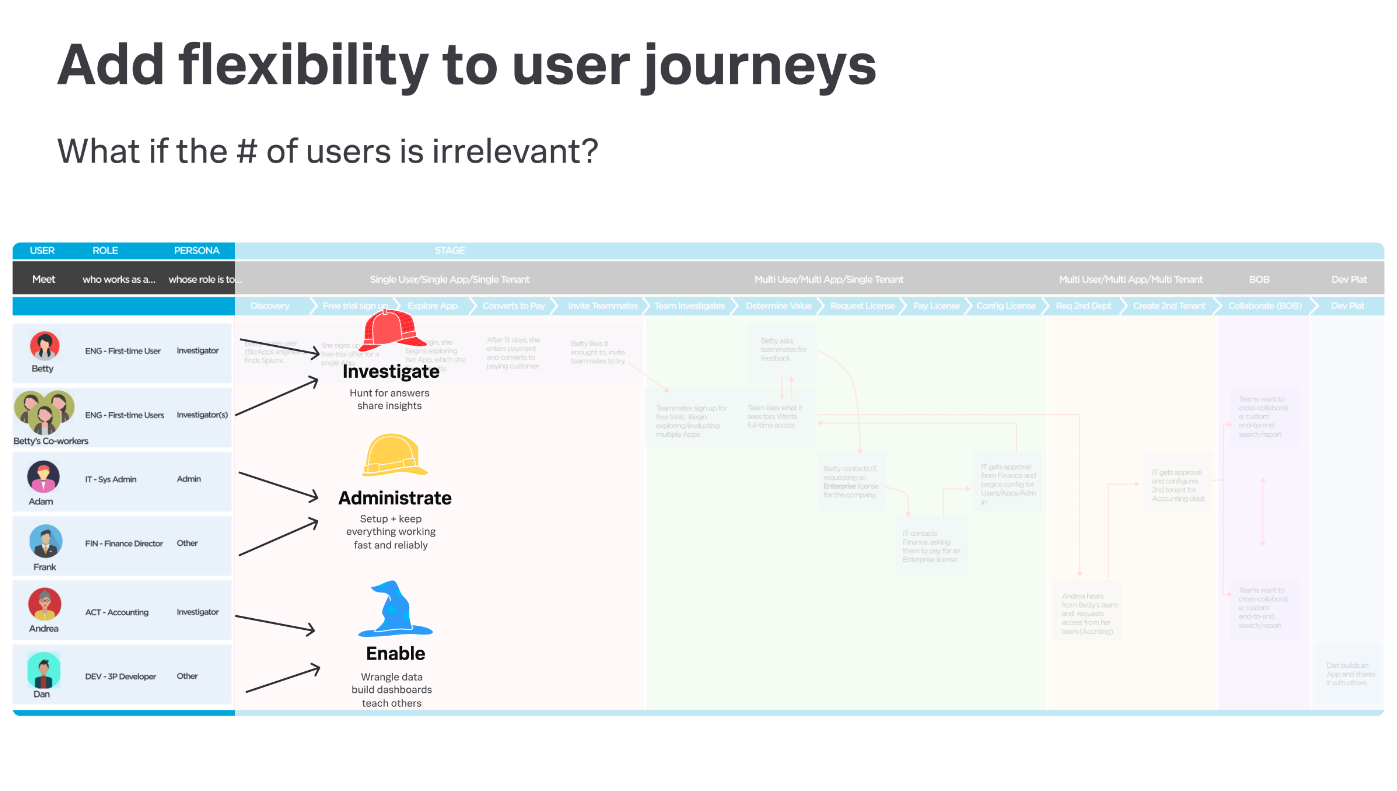

2. turning personas into x-product mindsets

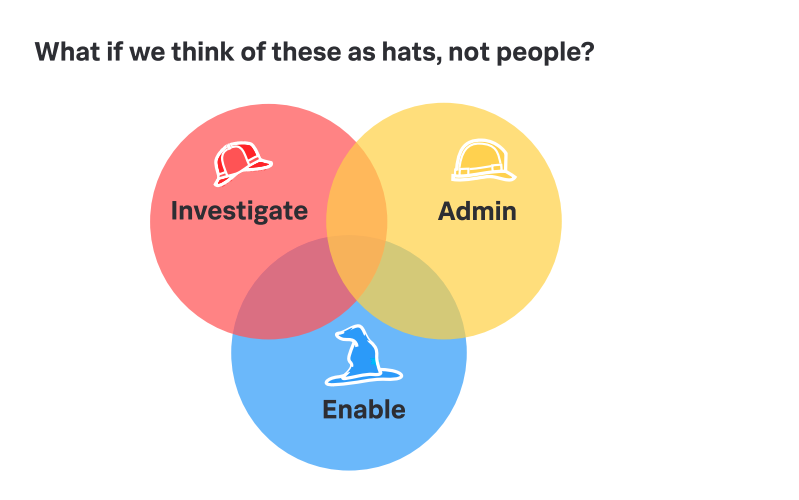

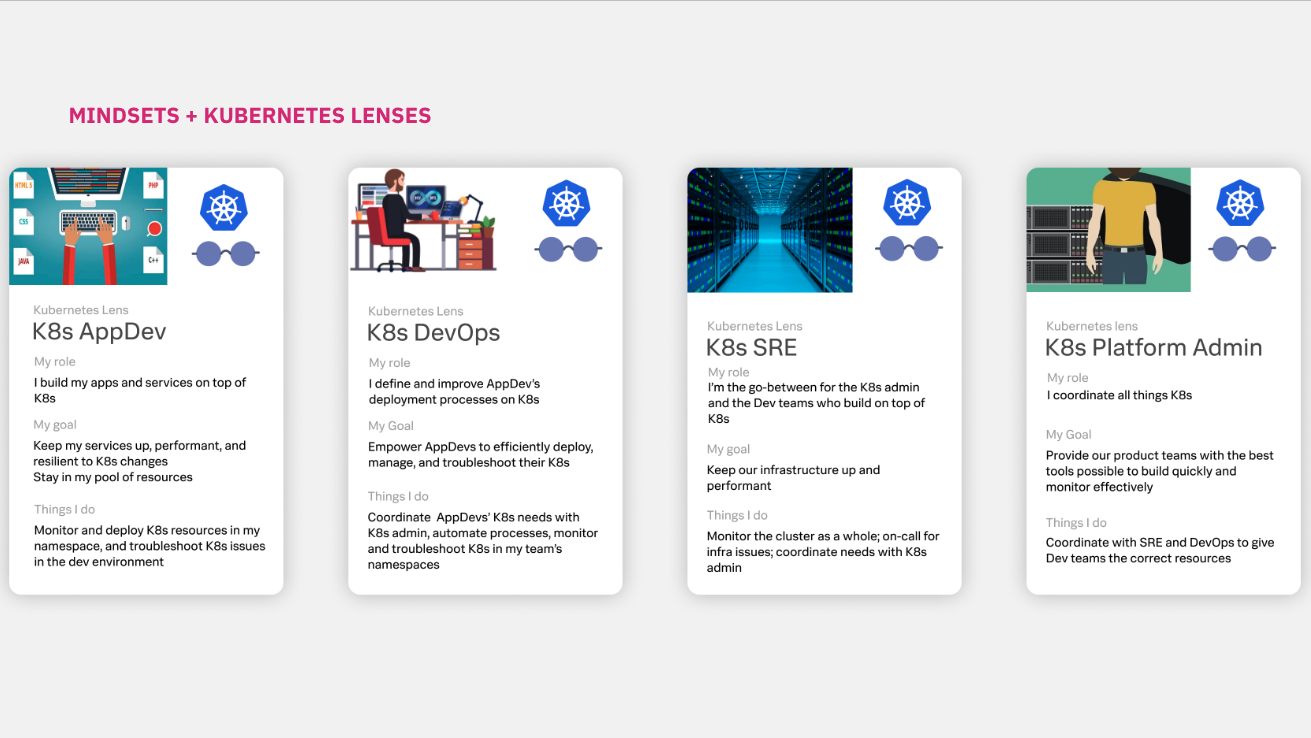

At the time, Splunk’s product teams had been referencing a list of 50+ personas. In a collaborative effort, the User Research team set out to audit these personas, standardize them, reduce their number, and evangelize our new “persona” list.

The result was “User Mindsets,” a more flexible way to represent the mindset different users have when using Splunk products. We used the GDI project as a pilot for these mindsets. One user might embody multiple mindsets — or “wear different hats” — at different times in their product usage.

Slides from a “Personas as Hats” deck proposing the new Mindset framework.

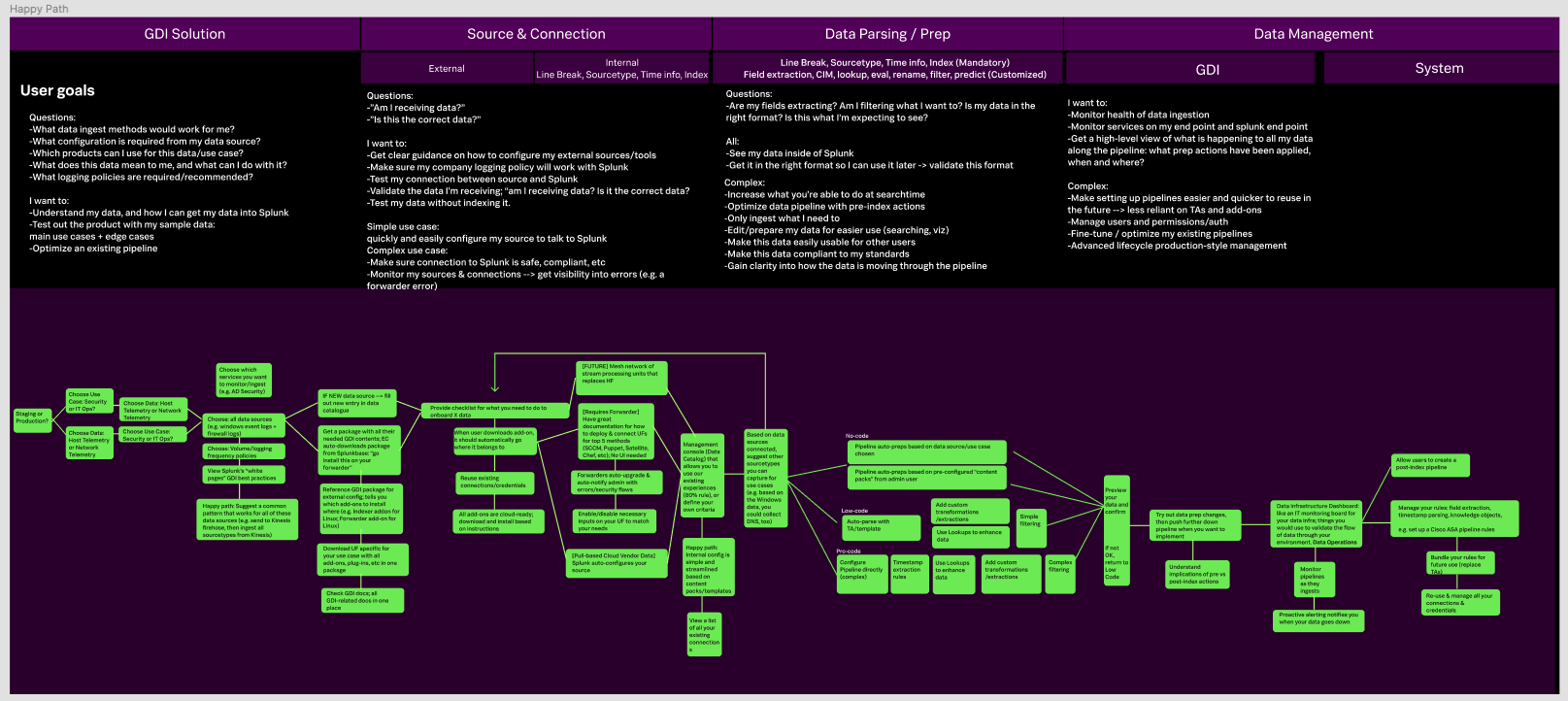

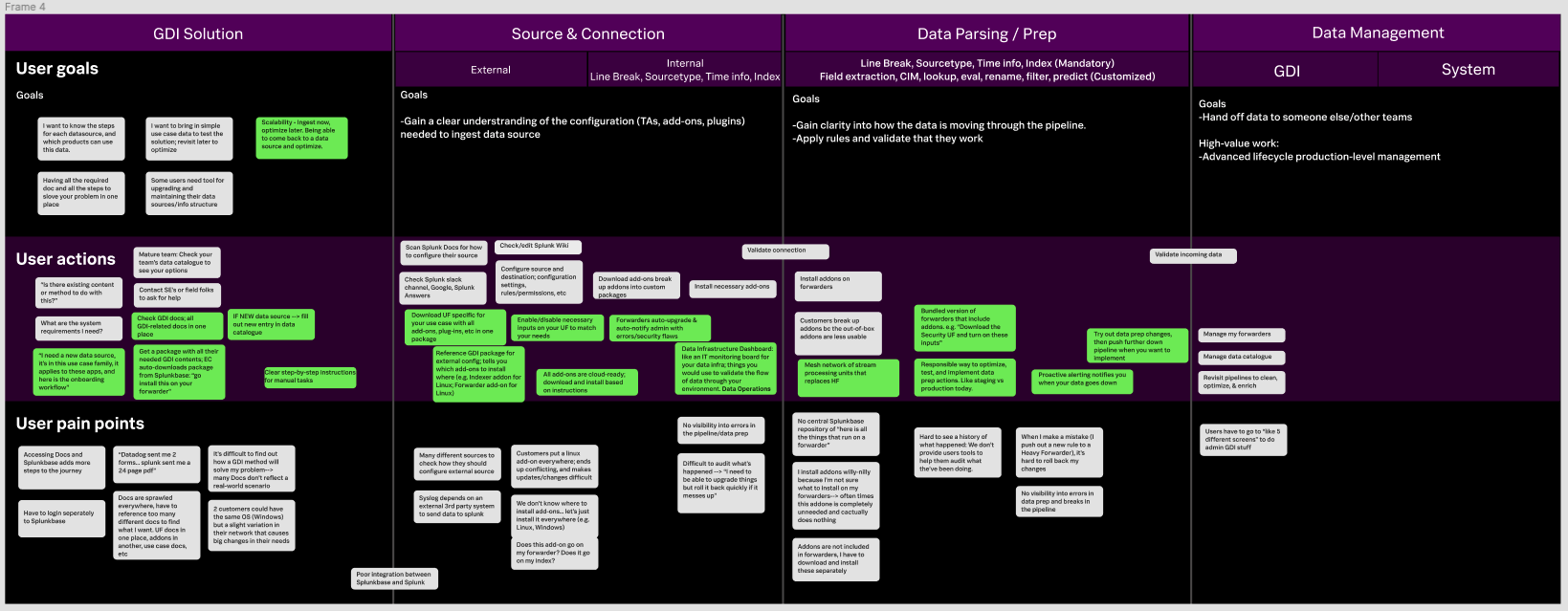

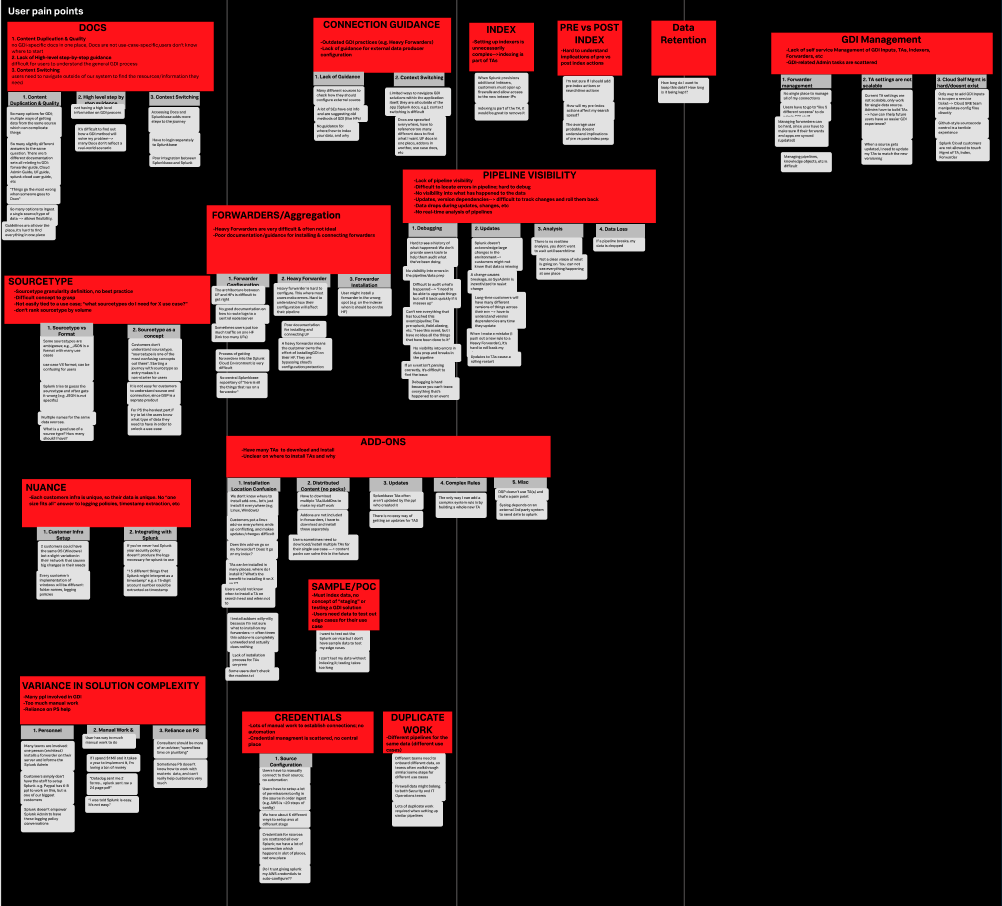

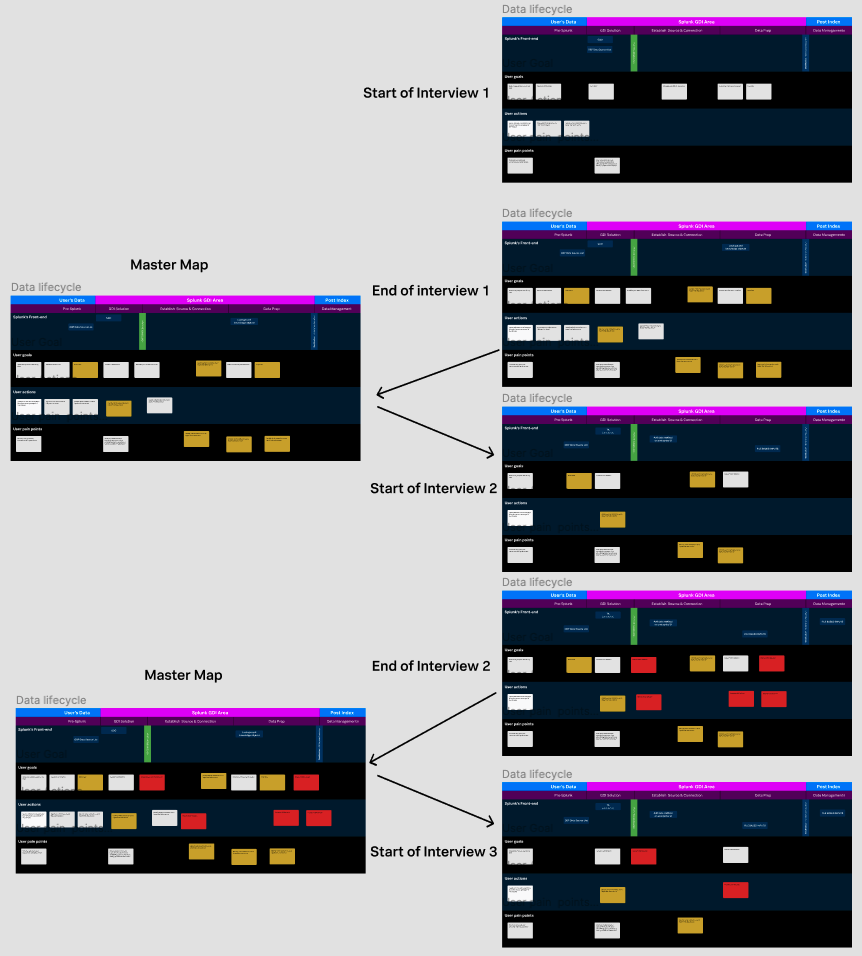

3. service mapping | internal interviews with SMEs

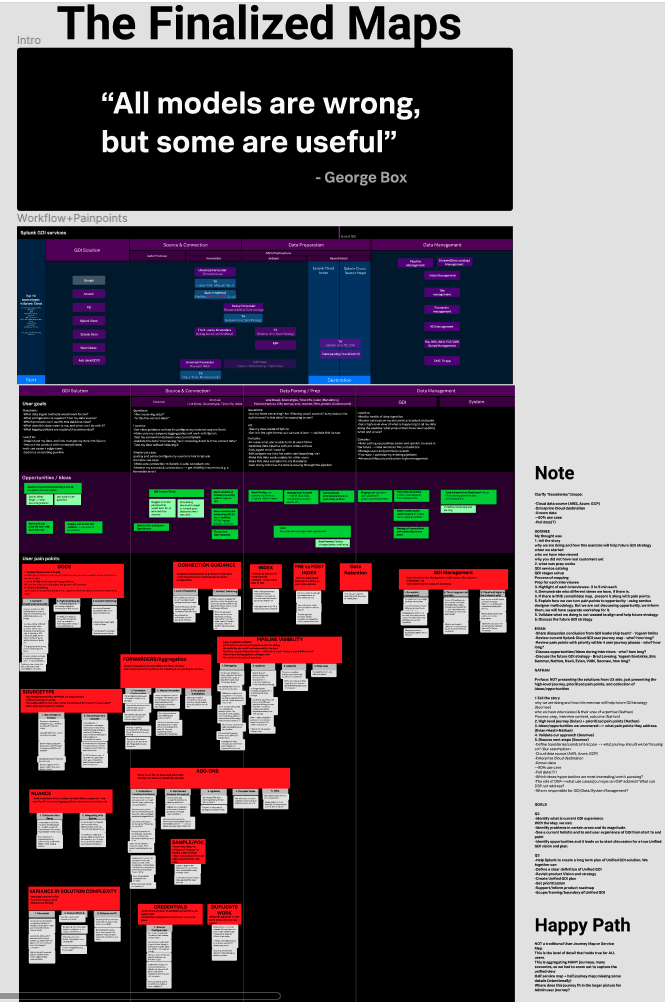

To better understand the GDI pain points, we first had to understand the data journey through Splunk’s backend services. To do so, we interviewed 12 GDI experts across PM, Architecture, Engineering, Design, and Solutions Engineering.

In each 90-minute interview, we mapped out the Splunk services and the pain points users experience with them.

After each interview, we combined the resulting service map into a “master map.” By interviewing a wide range of experts, we were able to piece together the complex service map of Splunk GDI.

Example output from service mapping exercises.

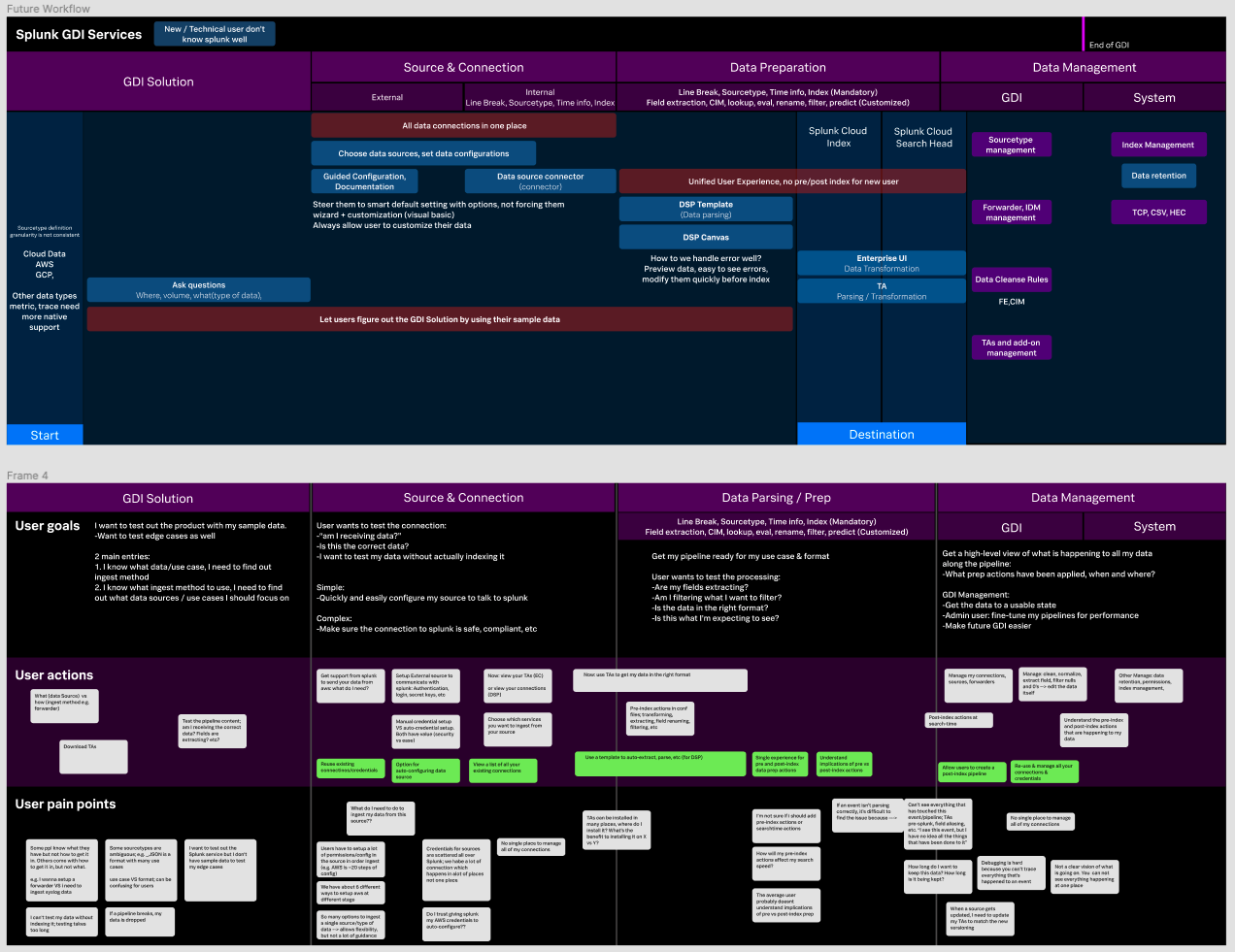

4. map the improved workflow

The team took our service map and list of pain points and began applying them to a specific GDI workflow: ingesting AWS data sources. I placed the relevant pain points along the workflow, and designers indicated how the solution addressed each pain point.

5. usability testing

We applied the improved workflow to the live product, and tapped into our Admin Database (from step 1) to find the right participants. I facilitated sessions with these admins to identify usability issues & develop a set of tactical design principles that we could apply to other GDI workflows.

key findings, insights, and recommendations

These are just a few of many findings. The full report is packed with more insights, quotes, highlight clips, and recommendations. Please contact me if you’d like to learn more.

INSIGHT 1:

Admin users need to see through the “black box” to understand how Splunk’s services will interact with their environment.

Our users are very technical and have significant concerns about the impact of the workflow’s script commands on their environment. This is especially true of admins from security and government use cases. Rather than just describing what is happening, we need to be very clear about why each script command exists.

“I’m on the ops team… I know all the services. The less black box-y, the better”

“I want a summary of what is going on, not how”

“Did this thing create a HEC token in the background? Why? How does this work with Splunk Cloud?”

“Why am I creating an S3 bucket?”

If we are unclear about the purpose and reasoning behind each step, admins might forgo the process altogether due to security and stability concerns.

GDI Design Principle: Strip away the black box by informing users of the why behind each step in the process.

Note: In science, computing, and engineering, a black box is a device, system, or object which produces useful information without revealing any information about its internal workings.

INSIGHT 2:

Admin users need to know details about the workflow before they begin, or we risk losing trust and scaring them away.

This workflow is not compatible with every customer’s environment. Some users discovered that it was incompatible with their environment only after ~30 minutes of work. These users would rather ingest data through the Command Line Interface.

“My company isn’t big on IAM users so I wish there was an alternative”

“we don’t allow a local user with a secret key”

“We don’t support AWS CLI V2 yet”

“I could’ve done this in 10 minutes in the AWS Console”

If we expose the inner workings & requirements up front, we can save our users time & effort while building trust.

GDI Design Principle: Inform users up-front about requirements, processes, output, and alternatives.

impact

A few notable impacts:

The video I created for the Hackathon project. I wrote the script, animated it, did the voiceover, and edited it. Design created by Yiyun Zhu and Hasti Khaki. Engineering demo created by Ajeet Kumar. Character sprites designed by Tatsuya Hama. (Please excuse the audio and lighting changes; I made this video in 3 hours to meet the tight deadline!)

Service map, pain points, and happy path were used by the GDI product teams to inform future GDI workflow improvements for other data sources

Immediate improvements to the AWS Data Ingest workflow based on service map and usability findings

A set of design principles was generated to guide future GDI projects

The “happy path” service/journey map inspired a hackathon project by me, two designers on the team, and an engineer. The resulting video won an award in the hackathon contest (see the video above).

Mindsets piloted on this project were adopted by other product teams, including Observability (which I was later moved to)

The GDI participant pool that I created from the survey responses was a source of participant recruitment for the GDI team for the next 12+ months

challenges & learnings

The project had so many stakeholders that the scope was huge. At first, we tried catering to everyone and had little success. We found success when we began applying our new workflow & principles to individual product teams. Start small, then move outward from there.

Revamping personas for an entire company is a difficult undertaking, especially when the org is understaffed on user researchers who could help product teams implement the new model. The personas-to-mindsets project became a passion of mine, and I spent too much time trying to get buy-in. I finally found success by teaming up with another User Researcher to implement mindsets in her specific product umbrella: Splunk Observability.

“Personas” will never go away. Trying to change the language to “mindsets” is a fruitless effort. However, most people don’t actually know what goes into a persona or how to use one. I could’ve continued using the word “persona” but provided a new template. In essence, I should’ve Trojan-horsed the mindset model into the existing persona language. Rather than convincing people to adopt a new framework, I could’ve just adjusted the existing framework.

thank you…

This was a difficult and complex undertaking, made possible only by constant collaboration across the team.

Soomee Kang | Design Manager

Soomee was open to any research approach that I suggested, and was supportive every step of the way, including her willingness to adopt User Mindsets for the project.

Yiyun Zhu | Principal UX Designer

Yiyun’s grasp of the technical side of Splunk GDI was crucial in creating a detailed service map of Splunk’s backend, as well as in identifying unique opportunities to simplify complex workflows.

Hasti Khaki | Sr. Product Designer

Hasti is a team player and a talented designer who is willing to take risks and explore new design concepts, and knows how to use design to tell a story.

Clark Mullen | Principal Product Designer

Despite not working on the GDI team, Clark loved the User Mindsets model and immediately hopped on board to get buy-in across the design team. His visionary attitude and openness to changing the status quo make him a fantastic design partner.